The research and open-source platforms developed at Sky-Lab would not be possible without the generous support of our sponsors.

We are sincerely grateful for their vital contributions.

Research Examples

- Autonomous Transportation and Cyber-Physical Systems (CPS) Testbeds

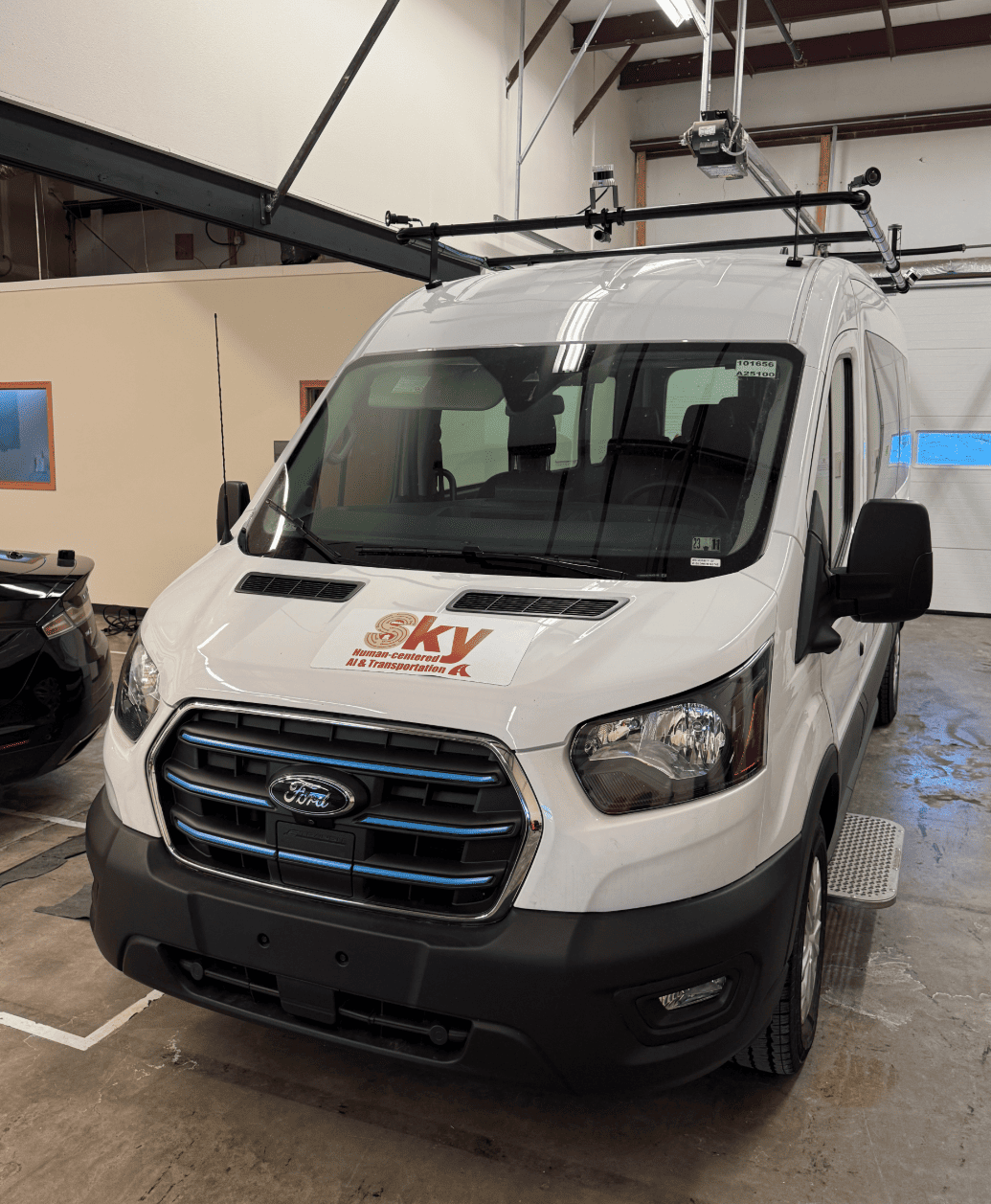

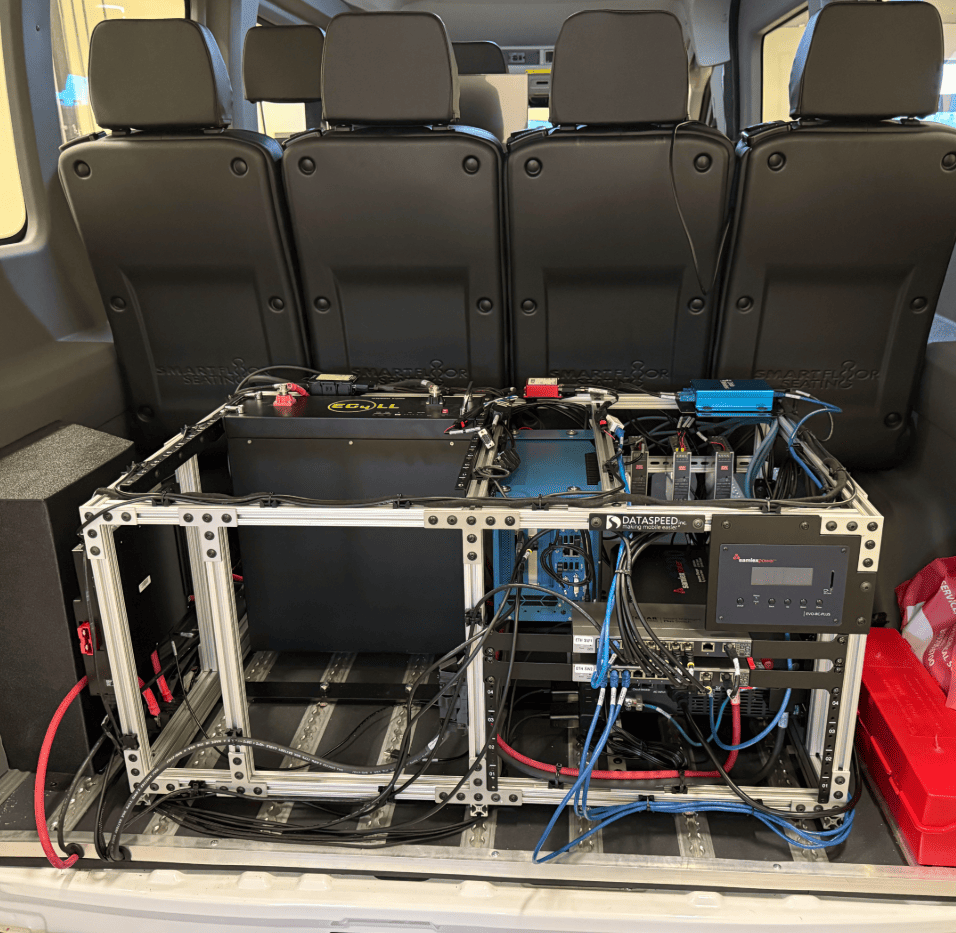

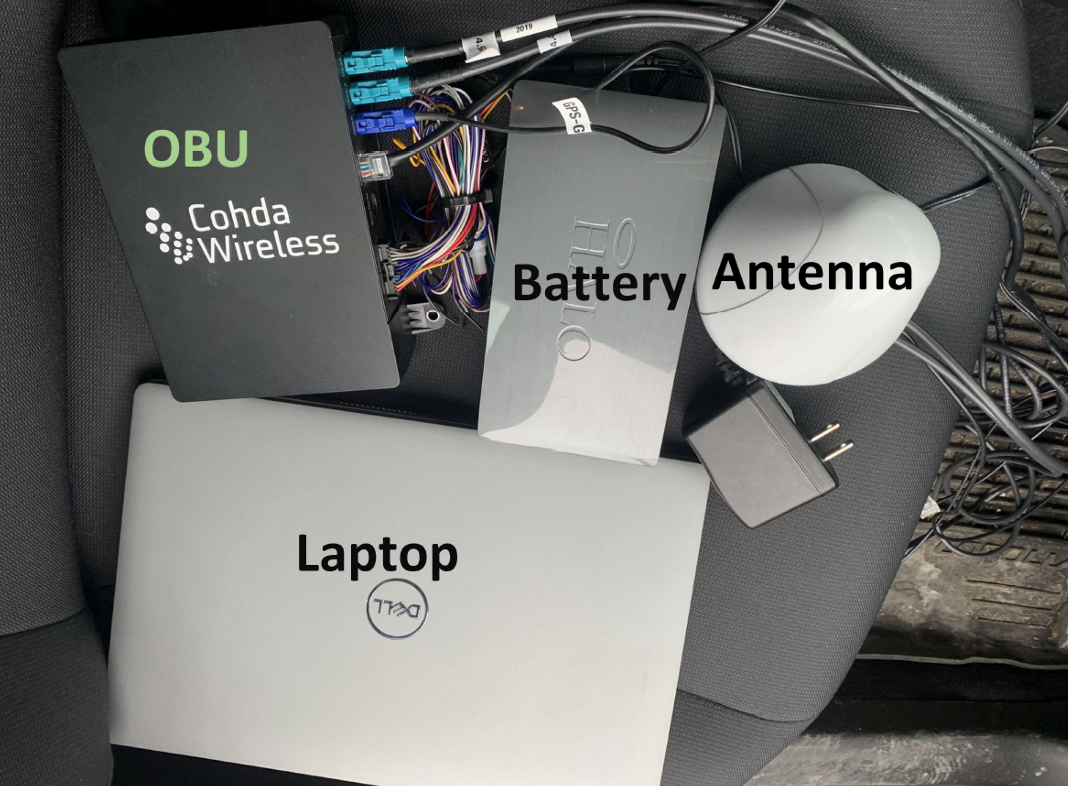

Sky-Lab operates an electric connected and autonomous van: a Ford E-Transit retrofitted with a drive-by-wire system, sensors (e.g., three LiDARs, seven cameras, and radars), onboard units (e.g., a Cohda MK6C OBU), 5G connectivity for remote control and data sharing (via a NETGEAR Nighthawk M6 Pro 5G mobile hotspot), and a high-performance computer. Sky-Lab also has several sets of portable roadside units (e.g., Siemens RSUs) and traffic signals, which can be combined into customizable traffic control systems at an intersection or along a corridor. Together, the vehicle and this equipment serve as Cyber-Physical Systems (CPS) testbeds for developing and testing advanced technologies, such as vehicle-to-everything (V2X) and vehicle-to-infrastructure (V2I) communication, sensor fusion, autonomous driving algorithms, AR/VR-enabled digital twin platforms, and foundation AI model deployment.

Sky-Lab hosts high-performance Lambda servers equipped with multiple NVIDIA GPUs, including the RTX A6000, RTX 4090, and RTX 4080. We are also deeply grateful to NVIDIA, which has donated two RTX PRO 6000 Blackwell GPUs and 20,000 GPU hours on A100 clusters to the lab.

Live demo at 2025 AAA Safe Mobility Conference, Madison, WI

End-to-end autonomous driving in urban and rural environments

- Remote Vehicle Operations and Cloud-Based AI Deployment

Our team has engineered a complete remote operations (teleoperation) platform for our autonomous van. Over the van's 5G connection, operators can control it in real time for driving, operation, and data collection from any location with an internet connection, such as a conference room or an off-site lab (see the video examples below). More importantly, the platform is designed for remote AI model deployment. This enables a powerful hardware-in-the-loop workflow: real-time sensor data is streamed from the vehicle to a local computer or a cloud service such as Amazon Web Services (AWS), where AI models process the data and send control commands back to the vehicle for immediate execution. We welcome collaboration with partners from industry, academia, and public agencies. Please contact us to discuss how our platform can help you remotely develop, deploy, and test your models, or to explore other forms of partnership.

Remote driving from the office. The van can be seen passing the window on the right at 0:02 and 0:42.

Students testing the remote driving function with a Logitech G923 Racing Wheel and Pedals
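The remote deployment workflow described above (stream sensor data off the vehicle, run a model on a local computer or in the cloud, send commands back) can be illustrated with a minimal Python sketch. This is not our platform's code: the class and function names, the 0.2 s latency budget, and the rule that the vehicle brakes fully when a command arrives too late are illustrative assumptions. The "model" is a toy distance-based braking rule standing in for a real AI model, and the 5G/AWS round trip is simulated with a fixed delay.

```python
from dataclasses import dataclass

# Hypothetical latency budget (seconds): commands older than this are not executed.
MAX_LATENCY_S = 0.2


@dataclass
class SensorFrame:
    timestamp: float            # capture time on the vehicle (s)
    obstacle_distance_m: float  # stand-in for real LiDAR/camera data


@dataclass
class ControlCommand:
    frame_timestamp: float  # timestamp of the frame this command responds to
    throttle: float         # 0..1
    brake: float            # 0..1
    steering: float         # -1..1


def infer(frame: SensorFrame) -> ControlCommand:
    """Toy off-board 'model' (hypothetical): brake harder as an obstacle gets closer."""
    if frame.obstacle_distance_m < 10.0:
        brake = min(1.0, (10.0 - frame.obstacle_distance_m) / 10.0)
        return ControlCommand(frame.timestamp, throttle=0.0, brake=brake, steering=0.0)
    return ControlCommand(frame.timestamp, throttle=0.3, brake=0.0, steering=0.0)


def vehicle_step(cmd: ControlCommand, now: float) -> ControlCommand:
    """Vehicle side: execute fresh commands; fall back to full braking if stale (assumed policy)."""
    if now - cmd.frame_timestamp <= MAX_LATENCY_S:
        return cmd
    return ControlCommand(cmd.frame_timestamp, throttle=0.0, brake=1.0, steering=0.0)


def run_loop(frames: list[SensorFrame], network_delay_s: float) -> list[ControlCommand]:
    """Simulate streaming each frame to the remote model and returning its command."""
    executed = []
    for frame in frames:
        cmd = infer(frame)                           # runs off-board (local PC or cloud)
        arrival = frame.timestamp + network_delay_s  # simulated round-trip delay
        executed.append(vehicle_step(cmd, arrival))
    return executed


if __name__ == "__main__":
    frames = [SensorFrame(0.0, 30.0), SensorFrame(0.1, 5.0)]
    for cmd in run_loop(frames, network_delay_s=0.05):
        print(cmd)
```

With a 0.05 s delay, both commands arrive within budget and are executed as computed; with a 0.5 s delay, every command is stale and the vehicle brakes instead. Keeping the staleness check on the vehicle, rather than in the remote model, means the safety fallback still works if the network link degrades.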

- Sky-Drive: An Evolving Open-Source Project

Open-source code will be released on this website. Stay tuned!

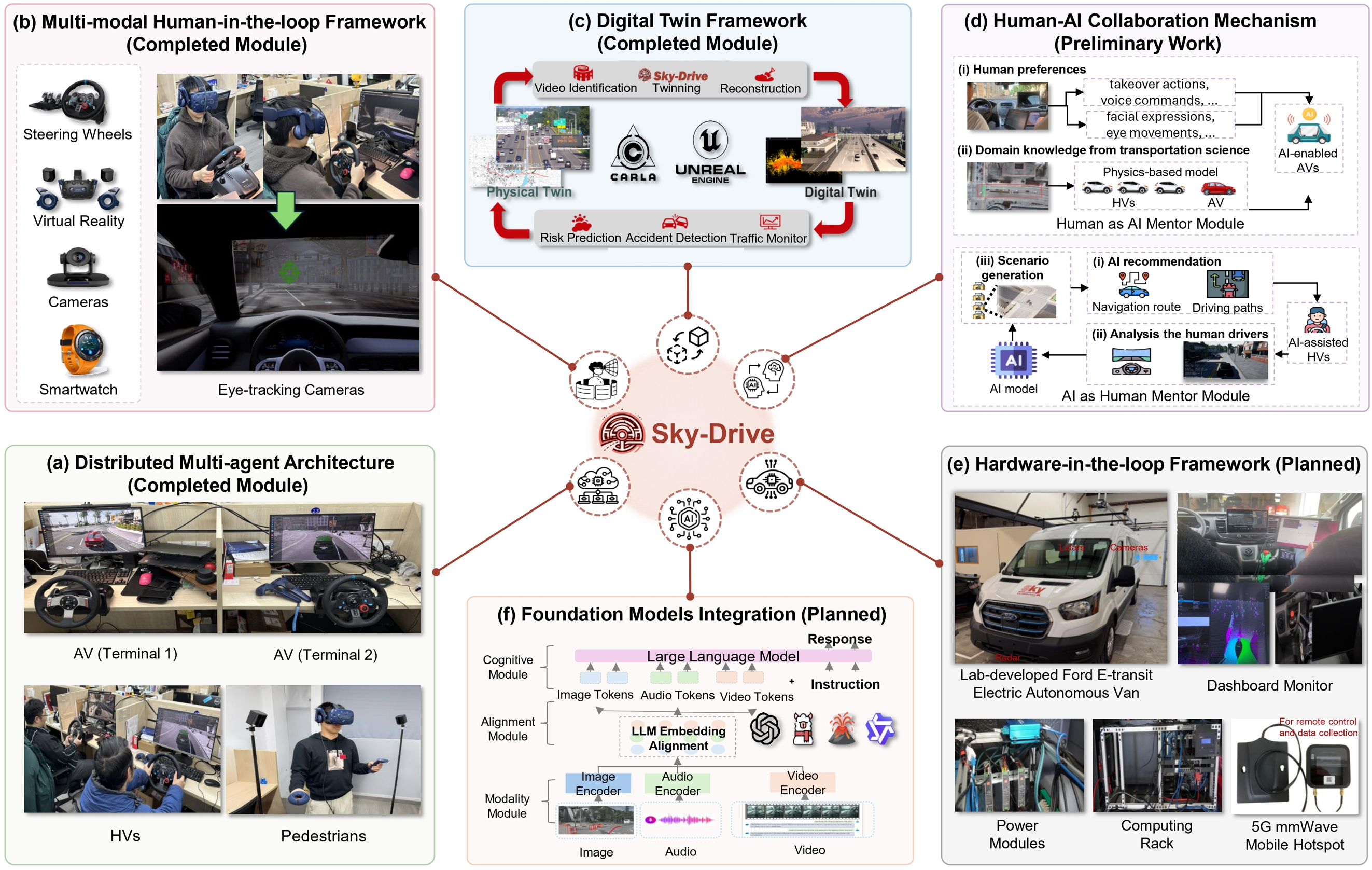

This has been a long-term initiative of our lab since summer 2023. We have made significant progress, as detailed in our recent publication, "Sky-Drive: A Distributed Multi-Agent Simulation Platform for Human-AI Collaborative and Socially-Aware Future Transportation," Journal of Intelligent and Connected Vehicles (accepted, in press). Sky-Drive enables distributed, multi-agent human-AI interaction by integrating Unreal Engine, CARLA, ROS, WebSocket, and custom scenario generation pipelines. It supports real-time behavior modeling of pedestrians, human-driven vehicles (HVs), and autonomous vehicles (AVs), along with collaborative testing across multiple terminals. A core component of the Sky-Drive platform is Sky-Lab's full Human/Hardware-in-the-Loop (HiL) setup for immersive simulation and behavioral analysis. This setup includes specialized equipment to create a realistic and data-rich testing environment:

- VR Headsets: The HTC Vive Pro Eye (with eye-tracking) and Meta Quest Pro are used to create immersive experiences and explore new possibilities in human-AI-machine interaction.

- Human/Hardware-in-the-Loop (HiL) System: This system enables researchers to test and validate control systems, algorithms, and human-machine interfaces in a safe and controlled environment. By integrating hardware components and human input into the loop, researchers can evaluate the performance and reliability of their designs under realistic conditions.

- Synchronization and Driving with Logitech Racing Wheel & Pedals: The wheel and pedals enhance the realism of driving simulations. They are also designed to synchronize the simulation with remote driving of our real-world autonomous van (a planned feature), enabling more accurate studies of human behavior and interaction in vehicle-related research.

Sky-Drive demo: distributed, multi-agent VR experiment

Sky-Drive overview

Outreach Activities

We connect our research to the real world through our Cyber-Physical Systems (CPS) platform, which spans both software and hardware by combining our open-source Sky-Drive platform with our lab’s full-scale autonomous van. This integrated system enables a complete pipeline from simulation to on-road testing. We regularly demonstrate our progress to professionals from industry, academia, and public agencies to ensure our work meets real-world needs.

- Live demo at 2025 AAA Safe Mobility Conference, Madison, WI

In the Madison Capitol area, we hosted a live demonstration of our electric autonomous van for more than 50 conference attendees from industry, academia, and public agencies. The demo showcased our integrated software and hardware systems and featured technologies developed in-house, including autonomous driving, path following, crash avoidance, and traffic signal recognition.

- Live demo at 2025 Wisconsin Automated Vehicle External (WAVE) Advisory Committee Meeting

We had the privilege of presenting our latest research on connected and automated vehicles (CAVs) to a distinguished audience that included WisDOT Deputy Secretary Scott Lawry, his colleagues at WisDOT, and partners from industry and academia. The presentation was followed by a live demonstration of our vehicle's capabilities, which took place after the workshop "CAV Technologies and Applications: A Transportation Stakeholders Resource Guide."